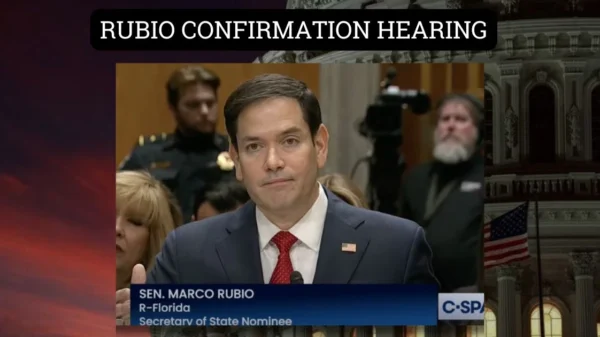

Last week, U.S. Sen. Marco Rubio, R-Fla., the vice chairman of the U.S. Senate Select Committee on Intelligence, delivered opening remarks at a hearing on the intersection of artificial intelligence (AI) and national security.

Excerpts from Rubio’s remarks are below.

This whole issue is fascinating to me, because the story of humanity is the story of technological advances, from the very beginning, in every civilization and culture. There’s positives in every technological advance, and there’s negatives that come embedded in it. Generally, technological advances have allowed human beings to do what humans do, but faster, more efficiently, and more productively, more accurately. Technological advances have, in essence, allowed humans to be better at what humans do. I think what scares people about this technology is the belief that it not simply holds the promise of making us better, but the threat of potentially replacing the human being, able to do what humans do without the human.

One of the things that’s interesting is that we’ve been interacting with AI, or at least models of AI and applications of it, in ways we don’t fully understand, from estimating how long it’s going to take to get from point A to point B, and which is the fastest route based on their predicted traffic patterns at that time of day, to every time you say “Alexa” or “Siri.” All of these are somewhat built into learning models. But now we get into the application of machine learning, where you’re basically taking data, and you’re now issuing recommendations of a predictive nature. That’s what we understand now. But there is deeper learning that actually seeks to mimic the way the human brain works. Not only can it take in things beyond text, like images, but it can, in essence, learn from itself and take on a life of its own. All of that is happening. And frankly, I don’t know how we hold it back….

How do you regulate a technology that is transnational, that knows no borders, and that we don’t have a monopoly on? We may have a lead on it, but some of the applications of AI are going to be pretty common, for purposes of what some nefarious actor might use….

Will we ever reach a point, and this is the one that to me is most troubling, where we can afford to limit it? As an example, [imagine] we are in a war, God forbid, with an AI general on the other side. Can we afford to limit ourselves in a way that keeps pace with the speed and potentially the accuracy of the decision-making of a machine on that end? The same is true in the business world when we get AI CEOs making decisions about where companies invest. There comes a point where you start asking yourself, can we afford to limit ourselves, despite the downside of some of this? It’s something we haven’t thought through….

Globalization and automation have been deeply disruptive to societies and cultures all over the world. We have seen what that means in displacing people from work and what it does to society and the resentments it creates. I think this has the potential to do that by times infinity. How disruptive this will be, the industries that it will fundamentally change, the jobs that it will destroy and perhaps replace with different jobs, but the displacement it could create – that has national security implications, [as does] what happens in the rest of the world and in some of the geopolitical trends that we see. I think it has the threat of reaching professions that up to this point have been either insulated or protected from technological advances because of their education level…. Look at the strike in Hollywood – a part of that is driven by the fear that the screenwriters and maybe even the actors will be replaced by artificial intelligence. Imagine that applied to multiple other industries and what that would mean for the world and for its economics.

There’s a lot to unpack here. But the one point that I really want to focus in on is, we may want to place these limits, and we may very well be in favor of them from a moral standpoint, but can we ever find ourselves at a disadvantage facing an adversary who did not place those limits and is therefore able to make decisions in real time at a speed and precision that we simply cannot match because of the limits we put on ourselves? That may still be the right thing to do. But I do worry about those implications.